Model Values

Overview

The Model Values report provides details on the results of the AFM (Additive Factor Model) algorithm1, a logistic regression performed over the “error rate” learning curve data. The AFM logistic regression, a standard regression bounded between 0 and 1, attempts to find the best-fit curve for error-rate data, which also ranges between 0 and 1.

The Model Values page presents a quantitative analysis of how well, given the selected knowledge component model, the AFM statistical model fits the data (via AIC, BIC, log likelihood) and how well it might generalize to an independent dataset from the same tutor (via cross validation RMSE).

Using R notation, the AFM model (applied to a modified student-step export file called "ds") can be approximately* represented as:

R> L <- length(ds$Anon.Student.Id)  # number of rows in the export

R> success <- vector(mode="numeric", length=L)  # starts as all 0s

R> success[ds$First.Attempt=="correct"] <- 1  # 1 only where the first attempt was correct

R> library(lme4)  # provides glmer() for generalized (here, binomial) mixed models

R> model1.glmer <- glmer(success~knowledge_component+knowledge_component:opportunity+(1|anon_student_id), data=ds, family=binomial())

Note: The success variable must be 0 or 1. The first three R commands simply convert the "First Attempt" values (in the student-step export) of "incorrect" and "hint" to 0, and "correct" to 1.

* The AFM code differs from the R expression above in two ways:

- To reduce over-fitting, AFM assumes learning cannot be negative and thus constrains the "slope" estimates of the knowledge_component:opportunity parameters to be greater than or equal to 0.

- The optimization applies a penalty to estimates of the student parameters (anon_student_id) for deviating from 0, essentially treating anon_student_id as a random effect.

AFM is a generalization of the linear logistic test model (LLTM)2. It is a specific instance of logistic regression, with student success (0 or 1) as the dependent variable and with independent variable terms for the student, the KC, and the KC by opportunity interaction. Without the third term (KC by opportunity), AFM is LLTM. If the KC model is the Unique-Step model (and there is no third term), the model reduces to the Rasch model of Item Response Theory.

For KC models that contain steps coded with multiple skills, DataShop implements a compensatory sum across all of the KCs of the multi-skilled steps, for both the KC intercept and slope. The sum is "conjunctive" when two (or more) parameter estimates are negative, i.e., performance is predicted to be worse when the KCs are combined than when they are alone. The sum is "disjunctive" when the parameter estimates are positive, i.e., performance is predicted to be better when the KCs are combined than when they are alone. If one estimate is positive and one is negative, the sum is "compensatory", like an average.
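As a rough illustration of the sum described above, the following Python sketch adds the intercept and slope contributions of every KC on a step and then converts the resulting logit to a probability. The function name, argument layout, and parameter values are hypothetical and are not taken from DataShop's implementation.

```python
import math


def predicted_success(student_intercept, kcs):
    """Return the predicted probability of a correct first attempt on one step.

    kcs is a list of (kc_intercept, kc_slope, prior_opportunities) tuples,
    one per KC coded on the step. All values here are illustrative.
    """
    logit = student_intercept
    for kc_intercept, kc_slope, prior_opportunities in kcs:
        # Each KC contributes its intercept plus its slope times practice.
        logit += kc_intercept + kc_slope * prior_opportunities
    return 1.0 / (1.0 + math.exp(-logit))


# Two KCs with negative intercepts: the combined step is predicted to be
# harder than either KC alone (the "conjunctive" case).
alone_a = predicted_success(0.0, [(-0.5, 0.1, 0)])
alone_b = predicted_success(0.0, [(-1.0, 0.1, 0)])
combined = predicted_success(0.0, [(-0.5, 0.1, 0), (-1.0, 0.1, 0)])
print(alone_a, alone_b, combined)
```

With two positive intercepts the same sum yields a higher prediction than either KC alone, which corresponds to the "disjunctive" case.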

AFM is run for all datasets in DataShop, once for each knowledge component model of a dataset. For large datasets, AFM will not run. "Large", in this case, is a function of the number of transactions, students, and KCs—a dataset with more than 300,000 transactions, 250 students, and 300 KCs will prevent AFM from running successfully. The current workaround for this limitation is to create a smaller dataset with a subset of the data.

Values

Based on the knowledge component model and the observed data, the following AFM model values are calculated:

KC Model Values

- AIC: The Akaike information criterion (AIC) is a measure of the goodness of fit of a statistical model, in this case, the AFM model. It is an operational way of trading off the complexity of the estimated model against how well the model fits the data3. In this way, it penalizes the model based on its complexity (the number of parameters). A lower AIC value is better.

- BIC: The Bayesian information criterion (BIC) is also a measure of goodness of fit of the AFM model. The BIC penalizes free parameters more strongly than does the Akaike information criterion (AIC)4. A lower BIC value is better.

- Log Likelihood: the basic fit statistic used in calculating both AIC and BIC. A higher (less negative) log likelihood indicates a closer fit. Unlike AIC and BIC, log likelihood assumes the model includes the right number of parameters; AIC and BIC take into account that the parameters of the model could be wrong both in number and value.

- Number of Parameters: the total number of parameters being fit by the AFM statistical model. This number will vary with the number of knowledge components in the knowledge component model, as well as the number of students for which there is data in the dataset.

- Number of Observations: the total number of observations used by the AFM statistical model.
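The relationship between these values can be sketched with the standard textbook definitions of AIC and BIC. The function names and the example numbers below are hypothetical, and whether DataShop applies any variant of these formulas is not stated here.

```python
import math


def aic(log_likelihood, n_parameters):
    """Standard AIC: 2k - 2 ln(L)."""
    return 2 * n_parameters - 2 * log_likelihood


def bic(log_likelihood, n_parameters, n_observations):
    """Standard BIC: k ln(n) - 2 ln(L)."""
    return n_parameters * math.log(n_observations) - 2 * log_likelihood


# Illustrative values only: a model with 50 parameters fit to 3000 observations.
example_aic = aic(-1200.0, 50)
example_bic = bic(-1200.0, 50, 3000)
print(example_aic, example_bic)
```

Because ln(n) exceeds 2 once there are more than about 7 observations, BIC charges more per parameter than AIC on any realistically sized dataset.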

Cross Validation Values

Cross validation is a technique for assessing how well the results of a statistical model (in this case, AFM for a particular KC model) will generalize to an independent dataset from the same tutor. It's reported as root mean squared error (RMSE). Lower values of RMSE indicate a better fit between the model's predictions and the observed data.
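For reference, RMSE can be computed as in the following minimal Python sketch. The function name is hypothetical, and the sketch assumes each observation is coded 0 or 1 and compared against a predicted probability.

```python
import math


def rmse(predicted, observed):
    """Root mean squared error between predictions and observed outcomes."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("predicted and observed must be non-empty and equal in length")
    squared_errors = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# A model that always predicts 0.5 is off by 0.5 on every 0/1 observation.
print(rmse([0.5, 0.5], [0, 1]))
```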

Three types of cross validation are run for each KC model in the dataset. All types are a 3-fold cross validation of the Additive Factor Model's (AFM) error rate predictions.

- Student blocked. With data points grouped by student, the full set of students is divided into 3 groups. 3-fold cross validation is then performed across these 3 groups.

- Item blocked. With data points grouped by step, the full set of steps is divided into 3 groups. 3-fold cross validation is then performed across these 3 groups.

- Unblocked. The full set of data points is divided into 3 groups, irrespective of student or step. 3-fold cross validation is then performed across these 3 groups.
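The three ways of dividing the data can be sketched as follows. This is a simplified illustration with hypothetical names and a random assignment; it does not reproduce DataShop's actual division procedure.

```python
import random


def assign_folds(rows, block_key=None, n_folds=3, seed=0):
    """Return a fold index (0..n_folds-1) for each row.

    rows is a list of dicts. block_key is "student" or "step" for blocked
    cross validation, or None for unblocked. Blocked assignment keeps all
    rows that share the same key value in the same fold.
    """
    rng = random.Random(seed)
    if block_key is None:
        unit_of_row = list(range(len(rows)))  # every row is its own unit
    else:
        unit_of_row = [row[block_key] for row in rows]
    units = sorted(set(unit_of_row))
    rng.shuffle(units)
    fold_of_unit = {unit: i % n_folds for i, unit in enumerate(units)}
    return [fold_of_unit[unit] for unit in unit_of_row]


rows = [{"student": s, "step": t}
        for s in ("s1", "s2", "s3", "s4")
        for t in ("t1", "t2", "t3")]
student_folds = assign_folds(rows, block_key="student")
step_folds = assign_folds(rows, block_key="step")
unblocked_folds = assign_folds(rows)
```

Each fold then serves once as the test set while the model is fit on the other two.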

For item blocked cross validation, the system optimizes the division of data so that as many KCs as possible appear in the training sets.

For unblocked cross validation, each student and each KC must appear in at least two observations; otherwise that student or KC is excluded from cross validation. This procedure ensures that all students and all KCs appear in both training and testing sets. However, dropping data points affects the following two values:

- Number of Parameters: the total number of parameters used in cross validation. This number can differ from the number of parameters in the AFM model if there are not enough observations for a student or KC. See the unblocked cross validation note above.

- Number of Observations: the total number of observations used in cross validation. This number can differ from the number of observations used by the AFM model if there are not enough observations for a student or KC. See the unblocked cross validation note above.
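The exclusion rule for unblocked cross validation might look like the sketch below. It makes a single pass over the data, which is an assumption; the row layout and function name are also hypothetical.

```python
from collections import Counter


def drop_sparse(rows, min_count=2):
    """Keep only rows whose student and KC each appear in at least min_count rows."""
    student_counts = Counter(row["student"] for row in rows)
    kc_counts = Counter(row["kc"] for row in rows)
    return [row for row in rows
            if student_counts[row["student"]] >= min_count
            and kc_counts[row["kc"]] >= min_count]


observations = [
    {"student": "s1", "kc": "k1"},
    {"student": "s1", "kc": "k1"},
    {"student": "s2", "kc": "k1"},  # s2 appears only once, so this row is dropped
    {"student": "s1", "kc": "k2"},  # k2 appears only once, so this row is dropped
]
kept = drop_sparse(observations)
print(len(observations), len(kept))
```

The rows removed here are why the cross validation counts of parameters and observations can be smaller than the counts reported for the AFM model itself.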

After taking into account the exclusion criteria described above, the following data requirements must be met for cross validation to run:

| Cross Validation Type | Requirement |
|---|---|
| Student blocked | at least 3 students |
| Item blocked | at least 3 steps |
| Unblocked | at least 3 students and 3 KCs |

KC Values

- Intercept (logit) at Opportunity 1: a parameter representing knowledge component difficulty, where higher values indicate an easier knowledge component and lower values indicate a more difficult KC.

- Intercept (probability) at Opportunity 1: derived from the intercept (logit). A parameter representing knowledge component difficulty, where higher values indicate an easier knowledge component and lower values indicate a more difficult KC.

- Slope: a parameter representing how quickly students will learn the knowledge component. The larger the KC slope, the faster students learn the knowledge component.
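To see how the KC values above relate to each other, the following Python sketch converts a logit intercept to a probability with the logistic function and applies the slope at each later opportunity. It assumes that opportunity 1 uses the intercept alone and that the student intercept is 0; the function name and values are hypothetical.

```python
import math


def kc_learning_curve(intercept_logit, slope, n_opportunities, student_intercept=0.0):
    """Predicted probability of success at opportunities 1..n for one KC.

    Assumes the slope is multiplied by (opportunity - 1), so the value at
    opportunity 1 is the logistic transform of the intercept alone.
    """
    curve = []
    for opportunity in range(1, n_opportunities + 1):
        logit = student_intercept + intercept_logit + slope * (opportunity - 1)
        curve.append(1.0 / (1.0 + math.exp(-logit)))
    return curve


curve = kc_learning_curve(intercept_logit=0.0, slope=0.2, n_opportunities=5)
print([round(p, 3) for p in curve])
```

A logit intercept of 0 corresponds to a probability of 0.5 at opportunity 1, and a positive slope makes each later prediction higher than the one before it.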

Student Values

- Intercept: a parameter representing a student's initial knowledge. The higher the student intercept, the more the student initially knew.

To view model values for a different knowledge component model:

- Select a different knowledge component model from the drop-down menu under Knowledge Component Models. The Model Values report will update to show values for that model.

Tip: To learn how to define a new knowledge component model, see KC Models.

To export model values:

- Click the button labeled Export Model Values. You will be prompted to save the exported text file.

Model Equation

Predicted success rate is the probability of the student being correct on the first try (no hint request or incorrect action) on a step.
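Assuming the standard AFM form given in Cen et al., and writing p_ij for the probability that Y_ij = 1, the model can be sketched in LaTeX as follows. The Q-matrix indicator Q_kj (assumed here to be 1 when item j involves knowledge component k and 0 otherwise) is notation introduced for this sketch; the remaining symbols are the ones defined in the list that follows.

$$
\ln\frac{p_{ij}}{1 - p_{ij}} = \theta_i + \sum_{k=1}^{K} Q_{kj}\,\beta_k + \sum_{k=1}^{K} Q_{kj}\,\gamma_k\,T_{ik}
$$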

where

- Υij = the response of student i on item j

- θi = coefficient for proficiency of student i

- βk = coefficient for difficulty of knowledge component k

- γk = coefficient for the learning rate of knowledge component k

- Τik = the number of practice opportunities student i has had on knowledge component k

and

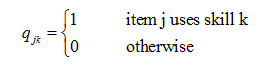

- Κ = the total number of knowledge components in the Q-matrix

Note:

- The γk parameter estimate (the learning rate of knowledge component k) is constrained to be greater than or equal to 0.

- User proficiency parameters (θi) are fit using a Penalized Maximum Likelihood Estimation method (PMLE) to reduce overfitting. User proficiencies are seeded with normal priors, and PMLE penalizes oversized student parameters in the joint estimation of the student and skill parameters.
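One way to picture the two notes above is as an objective function that an optimizer would minimize. The sketch below is an assumption-laden illustration, not DataShop's code: it uses a squared (ridge-style) penalty on the student parameters, which is what a normal prior centered on 0 implies, it handles only single-KC steps, and all names and values are hypothetical.

```python
import math


def penalized_nll(observations, theta, beta, gamma, penalty=1.0):
    """Negative log likelihood plus a squared penalty on student parameters.

    observations: list of (student, kc, prior_opportunities, correct) tuples,
    where correct is 1 or 0. theta, beta, and gamma are dicts of estimates.
    """
    if any(slope < 0 for slope in gamma.values()):
        raise ValueError("learning-rate (slope) estimates must be >= 0")
    nll = 0.0
    for student, kc, prior_opportunities, correct in observations:
        logit = theta[student] + beta[kc] + gamma[kc] * prior_opportunities
        p = 1.0 / (1.0 + math.exp(-logit))
        nll -= math.log(p) if correct else math.log(1.0 - p)
    # Student parameters far from 0 increase the objective.
    return nll + 0.5 * penalty * sum(t * t for t in theta.values())


obs = [("s1", "kc1", 0, 1), ("s2", "kc1", 1, 0)]
theta = {"s1": 0.5, "s2": -0.5}
beta = {"kc1": 0.0}
gamma = {"kc1": 0.1}
unpenalized = penalized_nll(obs, theta, beta, gamma, penalty=0.0)
penalized = penalized_nll(obs, theta, beta, gamma, penalty=1.0)
print(unpenalized, penalized)
```

A fitting routine would search for the parameter values that minimize this quantity while keeping every slope at or above 0.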

The intuition of this model is that the log-odds of a student getting a step correct is the sum of three quantities: the student's proficiency, the "easiness" of the knowledge components the step requires, and the amount of learning gained from each practice opportunity.

The term "Additive" comes from the fact that a linear combination of knowledge component parameters determines logit(pij) in the equation. For more information on the Additive Factor Model, see Hao Cen's PhD Thesis5.

1 Cen, H., Koedinger, K. R., and Junker, B. 2007. Is Over Practice Necessary? Improving Learning Efficiency with the Cognitive Tutor through Educational Data Mining. In Proceeding of the 2007 Conference on Artificial intelligence in Education: Building Technology Rich Learning Contexts that Work, R. Luckin, K. R. Koedinger, and J. Greer, Eds. Frontiers in Artificial Intelligence and Applications, vol. 158. IOS Press, Amsterdam, The Netherlands, 511-518.

Cen, H., Koedinger, K., and Junker, B. 2008. Comparing Two IRT Models for Conjunctive Skills. In Proceedings of the 9th international Conference on intelligent Tutoring Systems (Montreal, Canada, June 23 - 27, 2008). B. P. Woolf, E. Aïmeur, R. Nkambou, and S. Lajoie, Eds. Lecture Notes In Computer Science. Springer-Verlag, Berlin, Heidelberg, 796-798. DOI=http://dx.doi.org/10.1007/978-3-540-69132-7_111

2 Wilson, M., & De Boeck, P. (2004). Descriptive and explanatory item response models. In P. De Boeck, & M. Wilson, (Eds.) Explanatory item response models: A generalized linear and nonlinear approach. New York: Springer-Verlag.

3 Akaike information criterion. (2007, February 25). In Wikipedia, The Free Encyclopedia. Retrieved 16:22, March 7, 2007, from http://en.wikipedia.org/w/index.php?title=Akaike_information_criterion&oldid=110898239

4 Bayesian information criterion. (2007, February 19). In Wikipedia, The Free Encyclopedia. Retrieved 16:29, March 7, 2007, from http://en.wikipedia.org/w/index.php?title=Bayesian_information_criterion&oldid=109323430

5 Cen, H. (2009). Generalized Learning Factors Analysis: Improving Cognitive Models with Machine Learning.